This is Naked Capitalism fundraising week. 826 donors have already invested in our efforts to combat corruption and predatory conduct, particularly in the financial realm. Please join us and participate via our donation page, which shows how to give via check, credit card, debit card, PayPal, Clover, or Wise. Read about why we’re doing this fundraiser, what we’ve accomplished in the last year, and our current goal, karōshi prevention.

By Lambert Strether of Corrente.

As readers have understood for some time, AI = BS. (By “AI” I mean “Generative AI,” as in ChatGPT and similar projects based on Large Language Models (LLMs).) What readers may not know is that besides being bullshit on the output side (the hallucinations, the delvish), AI is also bullshit on the input side, in the “prompts” “engineered” to cause the AI to generate that output. And yet we allow, indeed we encourage, AI to use enormous and growing amounts of scarce electricity (not to mention water). It’s almost as if AI is a waste product all the way through!

In this very brief post, I will first demonstrate AI’s enormous power (and water) consumption. Then I will define “prompt engineering,” looking at OpenAI’s technical documentation in some detail. I will then show the similarities between prompt “engineering,” so-called, and the ritual incantations of ancient magicians (though I suppose alchemists would have done as well). I don’t mean “ritual incantations” as a metaphor (like Great Runes) but as a fair description of the actual process used. I will conclude by questioning the value of allowing Silicon Valley to make any society-wide capital investment decisions at all. Now let’s turn to AI power consumption.

AI Power Consumption

From the Wall Street Journal, “Artificial Intelligence’s ‘Insatiable’ Energy Needs Not Sustainable, Arm CEO Says” (ARM being a chip design company):

AI models such as OpenAI’s ChatGPT “are just insatiable in terms of their thirst” for electricity, Haas said in an interview. “The more information they gather, the smarter [sic] they are, but the more information they gather to get smarter, the more power it takes.” Without greater efficiency, “by the end of the decade, AI data centers could consume as much as 20% to 25% of U.S. power requirements. Today that’s probably 4% or less,” he said. “That’s hardly very sustainable, to be honest with you.”

From Forbes, “AI Power Consumption: Rapidly Becoming Mission-Critical”:

Big Tech is spending tens of billions quarterly on AI accelerators, which has led to an exponential increase in power consumption. Over the past few months, multiple forecasts and data points reveal soaring data center electricity demand, and surging power consumption. The rise of generative AI and surging GPU shipments is causing data centers to scale from tens of thousands to 100,000-plus accelerators, shifting the emphasis to power as a mission-critical problem to solve… The [International Energy Agency (IEA)] is projecting global electricity demand from AI, data centers and crypto to rise to 800 TWh in 2026 in its base case scenario, a nearly 75% increase from 460 TWh in 2022.
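As a back-of-the-envelope check (mine, not Forbes’s or the IEA’s), the two figures quoted above do work out to an increase of a bit under 75%. The short Python sketch below also derives an implied average annual growth rate, on the assumption of smooth compound growth over the four years from 2022 to 2026; that assumption is an illustration only and is not in the quoted text.

```python
# Figures as quoted from the IEA base case scenario (TWh).
demand_2022_twh = 460
demand_2026_twh = 800

# Total percentage increase between the two years.
increase = (demand_2026_twh - demand_2022_twh) / demand_2022_twh

# Implied average annual growth, ASSUMING smooth compounding over 4 years.
years = 2026 - 2022
annual_growth = (demand_2026_twh / demand_2022_twh) ** (1 / years) - 1

print(f"Total increase 2022-2026: {increase:.1%}")
print(f"Implied average annual growth: {annual_growth:.1%}")
```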

From the World Economic Forum:

AI requires significant computing power, and generative AI systems might already use around 33 times more energy to complete a task than task-specific software would.

As these systems gain traction and further develop, training and running the models will drive an exponential increase in the number of data centres needed globally – and associated energy use. This will put increasing pressure on already strained electrical grids.

Training generative AI, in particular, is extremely energy intensive and consumes much more electricity than traditional data-centre activities. As one AI researcher said, ‘When you deploy AI models, you have to have them always on. ChatGPT is never off.’ Overall, the computational power needed for sustaining AI’s growth is doubling roughly every 100 days.
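To put “doubling roughly every 100 days” in annual terms, here is a small sketch of the arithmetic. It assumes the doubling continues at a constant rate for a full 365-day year, which the World Economic Forum text does not claim, and it concerns computational power, not electricity use directly.

```python
# Doubling period as quoted; the constant rate over a year is an assumption.
doubling_period_days = 100
days_per_year = 365

# Number of doublings in a year, and the resulting growth factor.
doublings_per_year = days_per_year / doubling_period_days
yearly_factor = 2 ** doublings_per_year

print(f"Doublings per year: {doublings_per_year:.2f}")
print(f"Growth factor over one year: {yearly_factor:.1f}x")
```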

And from the Soufan Center, “The Energy Politics of Artificial Intelligence as Great Power Competition Intensifies”:

Generative AI has emerged as one of the most energy-intensive technologies on the planet, drastically driving up the electricity consumption of data centers and chips…. The U.S. electrical grid is extremely antiquated, with much of the infrastructure built in the 1960s and 1970s. Despite parts of the system being upgraded, the overall aging infrastructure is struggling to meet our electricity demands–AI puts even more pressure on this demand. Thus, the need for a modernized grid powered by efficient and clean energy is more urgent than ever…. [T]he ability to power these systems is now a matter of national security.

Translating, electricity is going to be increasingly scarce, even when (if) we start to modernize the grid. When push comes to shove, where do you think the power will go? To your Grandma’s air conditioner in Phoenix, where she’s sweltering at 116°F, or to OpenAI’s data centers and training sets? Especially when “national security” is involved?

AI Prompt “Engineering” Defined and Exemplified

Wikipedia (sorry) defines prompt “engineering” as follows:

Prompt engineering is the process of structuring an instruction that can be interpreted and understood [sic] by a generative AI model. A prompt is natural language text describing the task that an AI should perform: a prompt for a text-to-text language model can be a query such as “what is Fermat’s little theorem?”, a command such as “write a poem about leaves falling”, or a longer statement including context, instructions, and conversation history.

(“[U]nderstood,” of course, implies that the AI can think, which it cannot.) Much depends on how the prompt is written. OpenAI has “shared” technical documentation on this topic: “Prompt engineering.” Here is the opening paragraph:

As you can see, I’ve helpfully underlined the weasel words: “Better,” “sometimes,” and “we encourage experimentation” don’t give me any confidence that there’s any actual engineering going on at all. (If we were devising an engineering manual for building, well, an electricity generating plant, do you think that “we encourage experimentation” would appear in it? Then why would it here?)

Having not defined its central topic, OpenAI then goes on to recommend “Six strategies for getting better results” (whatever “better” might mean). Here’s one:

So, “fewer fabrications” is an acceptable outcome? For whom, exactly? Surgeons? Trial lawyers? Bomb squads? Another:

“Tend” how often? We don’t really know, do we? Another:

Correct answers not “reliably” but “more reliably”? (Who do these people think they are? Boeing? “Doors not falling off more reliably” is supposed to be exemplary?) And another:

“Representative.” “Comprehensive.” I guess that means keep stoking the model ’til you get the result the boss wants (or the client). And finally:

The mind reels.

The bottom line here is that the prompt engineer doesn’t know how the prompt works, doesn’t know why any given prompt yields the result that it does, and doesn’t even know that AI works. In fact, the same prompt doesn’t even give the same results each time! Stephen Wolfram explains:

[W]hen ChatGPT does something like write an essay what it’s essentially doing is just asking over and over again “given the text so far, what should the next word be?”, and each time adding a word.

Like glorified autocorrect, and we all know how good autocorrect is. More:

But, OK, at each step it gets a list of words with probabilities. But which one should it actually pick to add to the essay (or whatever) that it’s writing? One might think it should be the “highest-ranked” word (i.e. the one to which the highest “probability” was assigned). But this is where a bit of voodoo begins to creep in. Because for some reason (that maybe one day we’ll have a scientific-style understanding of) if we always pick the highest-ranked word, we’ll typically get a very “flat” essay, that never seems to “show any creativity” (and even sometimes repeats word for word). But if sometimes (at random) we pick lower-ranked words, we get a “more interesting” essay.

… And, in keeping with the idea of voodoo, there’s a particular so-called “temperature” parameter that determines how often lower-ranked words will be used, and for essay generation, it turns out that a “temperature” of 0.8 seems best. (It’s worth emphasizing that [whatever that means] [whose?].
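For readers who want to see the mechanism Wolfram is describing, here is a minimal Python sketch of picking the next word with a “temperature.” Everything in it is an assumption made for illustration: the word list and probabilities are invented, the function names are mine, and rescaling each probability by the power 1/temperature is one common way to implement the idea, not necessarily what ChatGPT does internally.

```python
import random


def apply_temperature(probs, temperature):
    """Rescale a word -> probability mapping by 1/temperature and renormalize.

    Temperatures below 1 sharpen the distribution toward the top-ranked
    word; temperatures above 1 flatten it toward lower-ranked words.
    """
    weights = {word: p ** (1.0 / temperature) for word, p in probs.items()}
    total = sum(weights.values())
    return {word: w / total for word, w in weights.items()}


def pick_next_word(probs, temperature, rng):
    """Pick one word; temperature 0 means always take the highest-ranked word."""
    if temperature == 0:
        return max(probs, key=probs.get)
    scaled = apply_temperature(probs, temperature)
    words = list(scaled)
    return rng.choices(words, weights=[scaled[w] for w in words], k=1)[0]


# Hypothetical next-word probabilities, not taken from any real model.
next_word_probs = {"learn": 0.45, "predict": 0.30, "make": 0.15, "understand": 0.10}

rng = random.Random(0)  # fixed seed only so that reruns of this script match
greedy = [pick_next_word(next_word_probs, 0, rng) for _ in range(5)]
sampled = [pick_next_word(next_word_probs, 0.8, rng) for _ in range(5)]

print("temperature 0:  ", greedy)
print("temperature 0.8:", sampled)
```

With temperature 0 the same word comes back every time (the “flat” essay). With temperature 0.8 and no fixed seed, the picks vary from run to run, which is why the same prompt need not give the same result twice.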

This really is bullshit. These people are like an ant pushing a crumb around until it randomly falls in the nest. The Hacker’s Dictionary has a term that covers what Wolfram is exuding excitement about, and which also covers prompt “engineering”:

voodoo programming: n.

[from George Bush Sr.’s “voodoo economics”]

1. The use by guess or cookbook of an obscure or hairy system, feature, or algorithm that one does not truly understand. The implication is that the technique may not work, and if it doesn’t, one will never know why. Almost synonymous with black magic, except that black magic typically isn’t documented and nobody understands it. Compare magic, deep magic, heavy wizardry, rain dance, cargo cult programming, wave a dead chicken, SCSI voodoo.

2. Things programmers do that they know shouldn’t work but they try anyway, and which sometimes actually work, such as recompiling everything.

I rest my case.

AI “Prompt” Engineering as Ritual Incantation

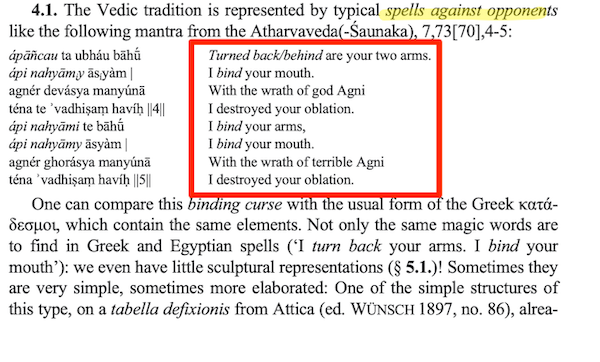

From Velizar Sadovski (PDF), “Ritual Spells and Practical Magic for Benediction and Malediction: From India to Greece, Rome, and Beyond (Speech and Performance in Veda and Avesta, I.)”, here is an example of an “Old Indian” Vedic ritual incantation (c. 900 BCE):

The text boxed in red is a prompt (natural language text describing the task), albeit addressed to a being even less scrutable than a Large Language Model. The expected outcome is confusion to an enemy. As with OpenAI’s ritual incantations, we don’t know why the prompt works, how it works, or even that it works. And as Wolfram explains, the outcome may be different each time. Hilariously, one can imagine the Vedic “engineer” tweaking their prompt: “two arms” gives better results than just “arms”; binding the arms first, then the mouth, works better; repeating the bindings twice works even better, and so on. And of course you’ve got to ask the right divine being (Agni, in this case), so there’s a lot of professional skill involved. No doubt the Vedic engineer feels free to come up with “creative ideas”!

Conclusion

The AI bubble (pace Goldman) seems far from being popped. AI’s ritual incantations are currently being chanted in medical data, local news, eligibility determination, shipping, and spookdom, not to mention the Pentagon (those Beltway bandits know a good thing when they see it). But the AI juice has to be worth the squeeze. Cory Doctorow explains the economics:

Eventually, the industry will have to uncover some mix of applications that will cover its operating costs, if only to keep the lights on in the face of investor disillusionment (this isn’t optional – investor disillusionment is an inevitable part of every bubble).

Now, there are plenty of low-stakes applications for AI that can run just fine on the current AI technology, despite its many – and seemingly inescapable – errors (“hallucinations”). People who use AI to generate illustrations of their D&D characters engaged in epic adventures from their previous gaming session don’t care about the odd extra finger. If the chatbot powering a tourist’s automatic text-to-translation-to-speech phone tool gets a few words wrong, it’s still much better than the alternative of speaking slowly and loudly in your own language while making emphatic hand-gestures.

There are lots of these applications, and many of the people who benefit from them would doubtless pay something for them. The problem – from an AI company’s perspective – is that these aren’t just low-stakes, they’re also low-value. Their users would pay something for them, but not very much.

For AI to keep its servers on through the coming trough of disillusionment, it will have to locate high-value applications, too. Economically speaking, the function of low-value applications is to soak up excess capacity and produce value at the margins after the high-value applications pay the bills. Low-value applications are a side-dish, like the coach seats on an airplane whose total operating expenses are paid by the business class passengers up front. Without the principal income from high-value applications, the servers shut down, and the low-value applications disappear.

Now, there are lots of high-value applications the AI industry has identified for its products. Broadly speaking, these high-value applications share the same problem: they are all high-stakes, which means they are very sensitive to errors. Mistakes made by apps that produce code, drive cars, or identify cancerous masses on chest X-rays are extremely consequential.

But why would anybody build a “high stakes” product on a technology that’s driven by ritual incantations? Airbus, for example, doesn’t include “Lucky Rabbit’s Foot” as a line item for a “fully loaded” A350, does it?

There’s so much stupid money sloshing about that we don’t know what to do with it. Couldn’t we give consideration to the idea of putting capital allocation under some sort of democratic control? Because the tech bros and VCs seem to be doing a very bad job. Maybe we could even do better than powering your Grandma’s air conditioner.